The Craft of AI: Designing with care, complexity, and consequences

A designer's guide to creating good AI experiences for humans

Context: A few weeks ago I delivered a brief 15-minute talk on “The Craft of AI” as part of the AI Experience Salon held at the Hacker Dojo in Silicon Valley. I’m very grateful to Ranjeet Tayi & Mala Ramakrishnan for their invitation to be part of that exciting, impactful event. 🙏🏽 Below is a more elaborate essay version of that talk, with further details and links. Enjoy!

Let me introduce Maya, a talented mid-level software developer, who decides to try an AI code review tool. She's heard great things about AI's potential to catch bugs and improve code quality. So she submits her latest feature for analysis, expecting the streamlined experience so heavily promised in the marketing hype.

Instead, she's greeted by an endless spinner. No other feedback. No sense of what's happening behind the scenes. Just a black box that might as well be mocking her with its opacity…sigh. 😔

But here’s the thing: this wasn't a failure of AI performance. The model probably worked just fine. The analysis likely completed successfully. This was a failure of design—specifically, a failure to understand that building AI products requires a reframed approach to craft, beyond static screens.

AI is inherently a dynamic probabilistic entity, whose qualities are still being defined.

People need to be part of the picture.

📦 Beyond the prompt box: Why AI design differs

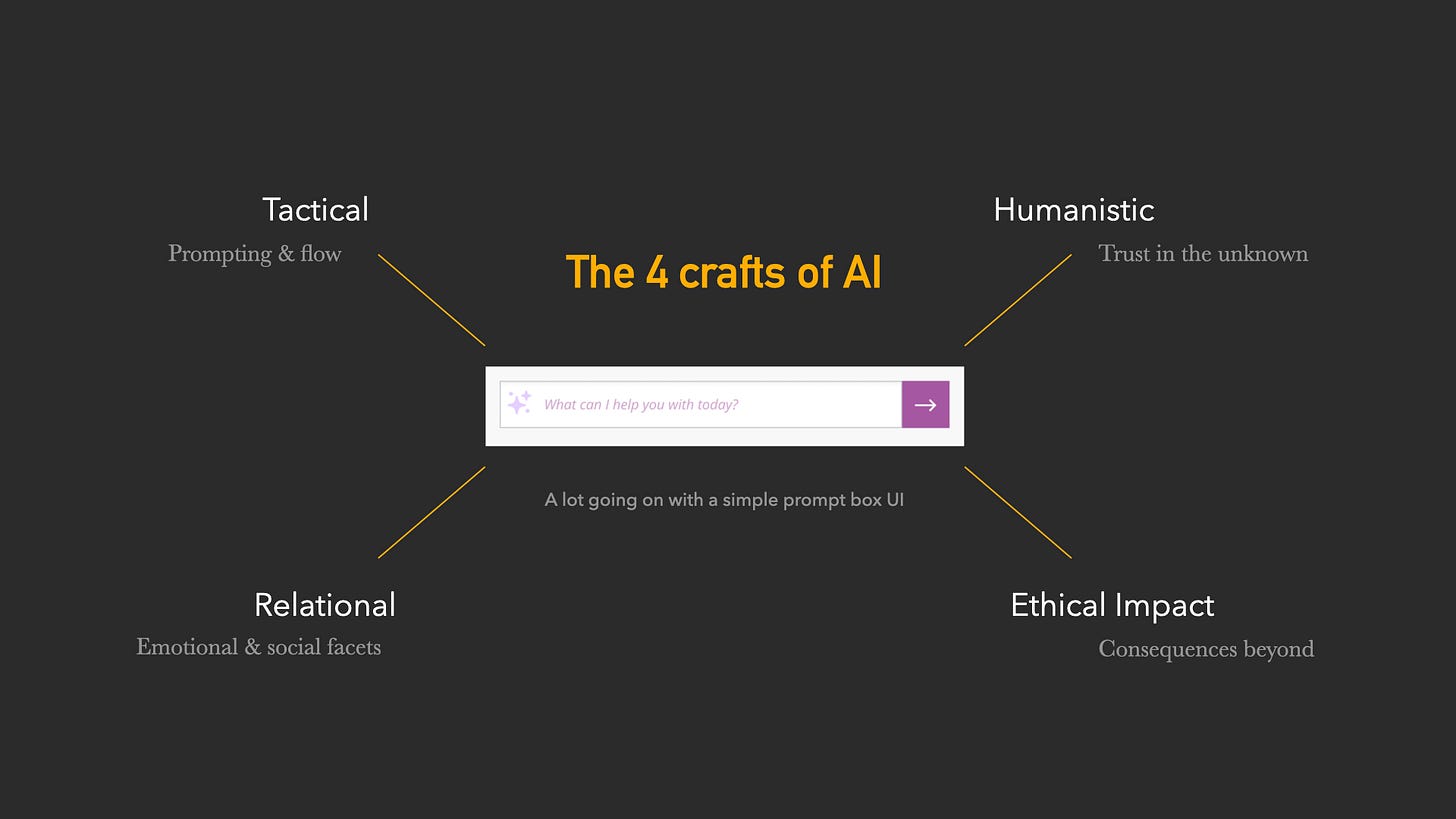

We've all seen the cool demos with the promise that natural language and massive models will unlock computational superpowers. ⚡️ Woo! But anyone who's shipped AI features knows the reality is far messier. 😬 Behind that deceptively simple prompt box or chat interface lies a tangled web of complexity that traditional UX patterns were never designed to handle.

The challenge isn't just making AI work — it's making it work with humans in ways that feel natural, trustworthy, and genuinely useful. This involves what I call the four crafts of AI design, each addressing fundamental tensions that emerge when humans and AI systems interact with each other: tactical, humanistic, relational, and ethical.

These aren't some nice-to-haves or polishing points; they're essential to determine whether your AI product is indispensable or abandoned after the first (frustrating) encounter. Let’s take a closer look into each one…

🛠️ Tactical craft: Designing for AI limits

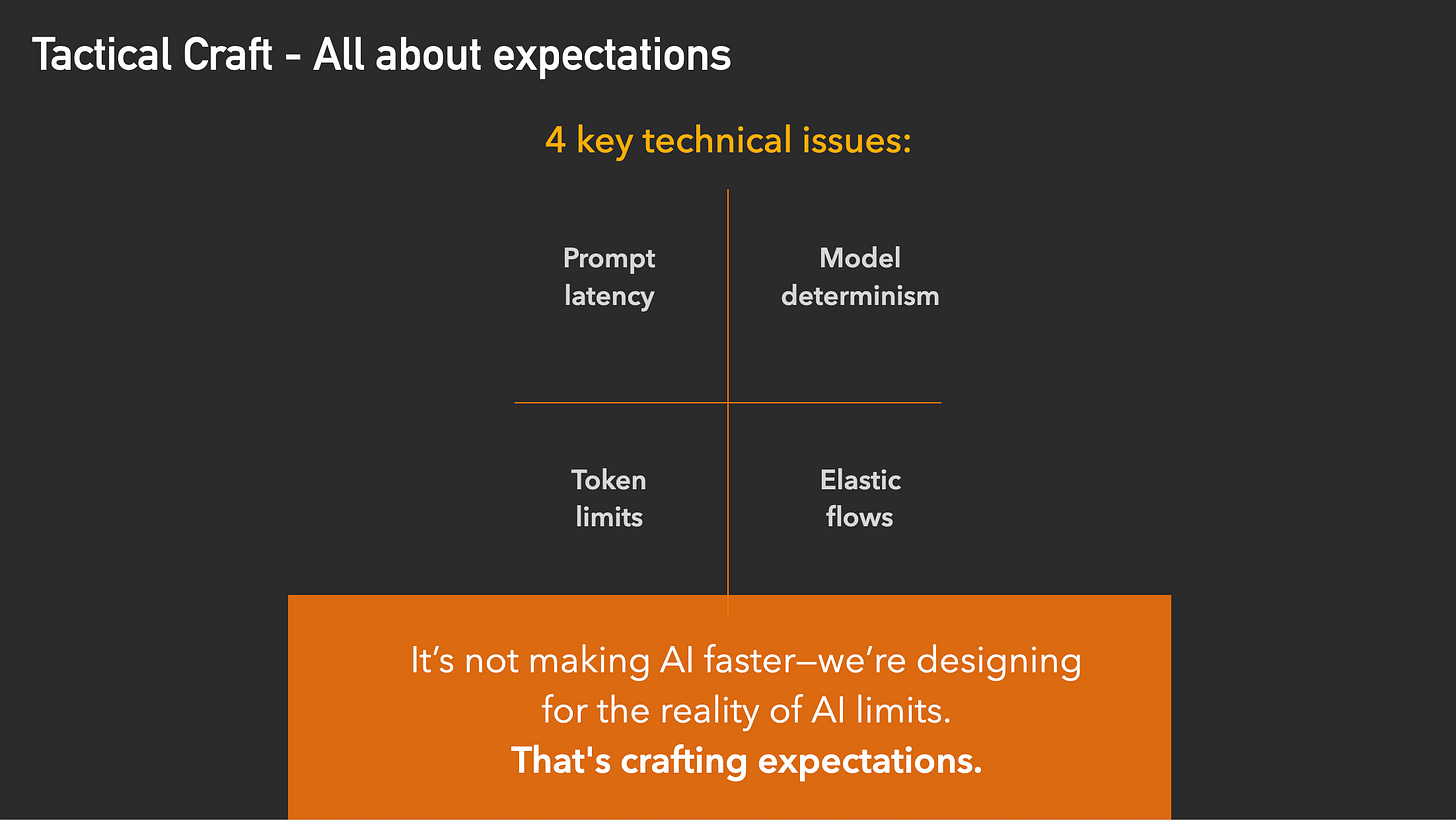

Let's start with the most immediately apparent challenges of prompt latency, model determinism, token limits, and elastic flows.

AI systems have certain characteristics that traditional software doesn't: they're unpredictable, resource-constrained, and prone to failures in ways that feel almost like a person (i.e., the “eager, but still learning intern” 😅). Tactical craft is about designing for these four particular realities instead of pretending they don't exist, by meaningfully applying visual design, information design, iconography, messaging, etc. so as to inform human expectations accordingly, and thus guide an effective, successful interaction.

Prompt latency → Design for anticipation

AI isn’t always instant! Each prompt or query can take anywhere from a couple of seconds to well over 30-45 seconds to process. So avoid hiding behind indeterminate spinners. Instead, use staged, meaningful feedback to turn wait time into momentum. Stream in those early signals of what’s happening. Cultivate user trust. Build anticipation, not anxiety.

Model determinism → Support variability

AI isn’t repeatably deterministic; the same prompt will often yield different results, both from one run to the next and across different models. Yet that’s not necessarily a flaw (if it’s not super mission-critical; more on that later); it can be an opportunity. Let users explore variations and apply their judgment & expertise. Think of it as a potential creative range, not just system instability.

⚡️ Pro tip: Avoid using technical model names like “Lemur v1.45b”. Instead, it’s better to emphasize the benefit/value, like “extended thinking, for multistep requests”. Remember: a human being who is not an engineer is using this.

Token limits → Make constraints visible

Models have limits to their prompt inputs, but don’t let users hit them blindly or haphazardly (see the definition of “token” at the end of this essay). Expose token usage, perhaps as a fuel gauge or in some other relatable way that conveys limits. It’s good to remember that tokens are an abstract concept to most folks, so try to leverage useful analogies. Offer options to retry or revise before the prompt hits the token limit, because hitting it forces users to start a whole new session, which is very frustrating. Prevent failure through clarity.

Elastic flows → Design for partial success / graceful recovery

AI tools often give partial output with some hiccups or missteps. But don’t show some generic, inscrutable system error; instead, show what did work. Recover gracefully with options to retrace steps or retry, or let users steer and revise the prompts. Think resilience, not restart.
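To make the partial-success idea a bit more concrete, here is a minimal Python sketch. It assumes an AI task that can be split into named steps; the `StepResult`, `run_steps`, and `summarize` names are hypothetical, invented for illustration rather than taken from any real tool. The point is only that the interface reports what did work and offers the failed steps for retry, instead of collapsing everything into one generic error.

```python
from dataclasses import dataclass


@dataclass
class StepResult:
    """Outcome of one step in a multi-step AI task (hypothetical structure)."""
    name: str
    ok: bool
    output: str = ""
    error: str = ""


def run_steps(steps):
    """Run every step, recording failures instead of aborting on the first one."""
    results = []
    for name, work in steps:
        try:
            results.append(StepResult(name, True, output=work()))
        except Exception as exc:
            results.append(StepResult(name, False, error=str(exc)))
    return results


def summarize(results):
    """Build a user-facing message that shows what worked and what can be retried."""
    done = [r for r in results if r.ok]
    failed = [r for r in results if not r.ok]
    lines = [f"Completed {len(done)} of {len(results)} steps."]
    for r in done:
        lines.append(f"  done: {r.name}")
    for r in failed:
        lines.append(f"  needs retry: {r.name} ({r.error})")
    return {"message": "\n".join(lines), "retry": [r.name for r in failed]}
```

The `retry` list is what a "try again" button could act on, so the user only repeats the part that failed.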

In effect, tactical craft isn't about making AI faster or more robust, per se. It's about designing for AI's inherently volatile, quirky, and dynamic characteristics rather than fighting them or forcing them a certain way. It's the difference between a tool that feels broken and one that feels thoughtfully considered, where humans hold a valued role in the interaction. 🙌🏽
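As one illustration of the staged feedback described under prompt latency, the sketch below announces each stage before it runs, rather than showing a single indeterminate spinner. It is a simplified Python example under stated assumptions: `run_with_stages` and the `on_status` callback are hypothetical names, and a real product would likely stream these updates to the interface asynchronously.

```python
def run_with_stages(stages, on_status):
    """Run labeled stages in order, reporting progress before each one starts.

    `stages` is a list of (label, callable) pairs; `on_status` receives
    short, human-readable progress messages (both are assumptions).
    """
    total = len(stages)
    outputs = []
    for index, (label, work) in enumerate(stages, start=1):
        on_status(f"Step {index} of {total}: {label}")
        outputs.append(work())
    on_status("Done")
    return outputs


if __name__ == "__main__":
    # Hypothetical stages for a code review; the lambdas stand in for real work.
    review_stages = [
        ("Reading your changes", lambda: "parsed"),
        ("Checking for security issues", lambda: "1 issue"),
        ("Drafting suggestions", lambda: "3 suggestions"),
    ]
    run_with_stages(review_stages, print)
```

Labels written in the user's language ("Reading your changes") do more for trust than internal stage names would.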

Communicate with people using the AI tool, not just at them.
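One way to communicate a constraint like the token limit is the fuel gauge mentioned earlier. The Python sketch below is only illustrative: `estimate_tokens` uses a rough rule of thumb of about four characters per token, which is an assumption and not how any particular model counts, and the 80% warning threshold and the wording of the messages are likewise made up for the example.

```python
import math


def estimate_tokens(text, chars_per_token=4):
    """Very rough token estimate; real tokenizers vary by model (assumption)."""
    return math.ceil(len(text) / chars_per_token)


def gauge(used, limit, width=10, warn_at=0.8):
    """Render token usage as a text 'fuel gauge' with a plain-language note."""
    fraction = min(used / limit, 1.0)
    filled = round(fraction * width)
    bar = "#" * filled + "-" * (width - filled)
    percent = round(fraction * 100)
    if fraction >= 1.0:
        note = "Limit reached: shorten your prompt or start a new session."
    elif fraction >= warn_at:
        note = "Running low: consider trimming your prompt."
    else:
        note = "Plenty of room left."
    return f"[{bar}] {percent}% of prompt limit used. {note}"
```

Warning before the limit is reached gives people a chance to revise, instead of discovering the constraint only when the session breaks.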

🙌🏽 Humanistic craft: Building trust in the unknown

Going back to the story of Maya using AI for a code review — her frustration wasn't just about waiting; it was about not knowing. She just couldn't see the AI's process, couldn't evaluate its reasoning, couldn't calibrate her trust appropriately. This kind of information asymmetry is one of the most challenging aspects of AI design. 😬

The user feels at the mercy of this machine, surrendering to its opacity. That's not good at all: it conveys a vibe of disrespect, suggesting the user is somehow inferior.

Traditional software has clear cause & effect. Click a button, get a result! AI introduces uncertainty at every level. How confident is this recommendation? What data influenced this output? Why this suggestion over alternatives? 🤔

Humanistic craft is about bridging this gap, helping the system feel trustworthy through transparency. For example, show reasoning when possible, indicate confidence levels, and provide enough context for users to make informed decisions, or to make changes, even revert & retry as needed.

This respects her as a person & collaborator, not just a recipient of some perceivably random output.

Consider how the following transforms Maya’s experience above. Instead of a black box analysis hiding behind an endless spinner, she sees:

"Found 3 potential issues based on common patterns in Python codebases. High confidence on the security vulnerability (line 47), medium confidence on the performance optimization (line 23)." [with techdoc sources cited for each line]

Now she's not blindly accepting or rejecting suggestions. She's working with an AI system that has revealed its reasoning and confidence, and created an opening for her to demonstrate her agency to decide. This kind of calibration is crucial. We want people to trust AI enough to engage with it, but not so much that they stop thinking critically, especially in work productivity situations! 😆

The goal isn't to replace human judgment but to augment it.
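A message like the one Maya sees could be assembled from structured findings rather than free text. The Python sketch below is a hedged illustration: the `Finding` fields, the numeric confidence score, and the 0.8 / 0.5 thresholds are all assumptions made for the example, not the behavior of any real code review tool.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """One review finding (hypothetical structure)."""
    kind: str
    line: int
    confidence: float  # assumed to be a 0.0-1.0 score supplied by the tool
    source: str        # documentation the suggestion is based on


def confidence_label(score):
    """Map a numeric score to a plain-language label (thresholds are assumptions)."""
    if score >= 0.8:
        return "High"
    if score >= 0.5:
        return "Medium"
    return "Low"


def describe(findings):
    """List findings from most to least confident, each with its cited source."""
    ordered = sorted(findings, key=lambda f: f.confidence, reverse=True)
    lines = [f"Found {len(ordered)} potential issues."]
    for f in ordered:
        label = confidence_label(f.confidence)
        lines.append(f"- {label} confidence: {f.kind} (line {f.line}). Source: {f.source}")
    return "\n".join(lines)
```

Leading with the most confident finding and naming the source for each one gives the reader something to verify, which supports calibrated trust rather than blind acceptance.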

⚖️ Relational craft: Designing AI relationships

OK here’s where it gets super interesting. 🙃

Over time, users don't just interact with AI; they naturally develop relationships with it, just like with mobile devices, services, and brands. A useful reference is the book 'The Meaning of Things' from 1981 by the psychologist Csikszentmihalyi, in which he interviewed families in Chicago to understand their relationship to common family objects around the house, like heirlooms or a small appliance. One of the more interesting interpretations is that a person's need to impute meaning to something in their environment, and thus in their lives, could be a matter of survival. So relationship-forming will happen, though we want to avoid deliberate anthropomorphizing, since humans will do that naturally anyway! 😬

These relationships have emotional & social aspects that demand care and consideration, accordingly.

For example, the initial frustration of Maya, our mid-level programmer, evolved into confidence as the AI proved its value. But more importantly, her expectations evolved too. This well-designed AI wasn't just a "speedy intern" churning out suggestions; it became a "critical colleague" that helped her think through complex problems. Hmm! What does that mean, in terms of expectations and also forming dependencies on the AI? I realize we might all consider ChatGPT our new BFF, but let's practice some self-awareness about what's really happening. 😉

This relational dimension requires careful thought beyond the screens. We need to avoid the anthropomorphism trap (designing AI that pretends to be human) while acknowledging that users will often naturally form social expectations about AI behavior. It’s a bit of an art or dance, factoring in content tone/language, visual symbolism (or not), and increasingly even audio and animated cues (for example, via Perplexity's voice mode). There may be some kind of personality definition that emerges through a user's interpretation and meaning-making, but in what ways and what are the boundaries to shape that?

The point is evolving consistency & reliability in the relationship, in addition to a sense of a personality.

For example, when the AI suggests something, does it always meticulously explain its reasoning, or start to blur things a bit with implicit cues? When it's uncertain, does it always acknowledge that uncertainty? In what tone of voice or diction? How is that conveyed to, and also determined by the user, if there’s space for that? These patterns build effective relationships over time and shape how users integrate AI into their workflows. Also, as users become more sophisticated, they may demand more sophisticated capabilities. 🤨

The relationship deepens, but so does the complexity of what users want to accomplish.

🧭 Ethical craft: Designing for consequences

The most complex, perhaps most nebulous, craft addresses what happens beyond the immediate interaction. Every AI system creates ripple effects, some intended, others not. The ethical craft is about designing with intentional awareness of these broader consequences, but without getting locked into "analysis paralysis". The aim is to foresee, and get ahead of, potential negative impacts. Think of running various simulations to explore what emerges behaviorally, or drafting a "Black Mirror" style speculative fiction to probe possible paths.

Let’s go back to Maya’s evolution with the code review AI tool. After months of use, she reports feeling "uncomfortable shipping code without an AI review." Is this good or bad? It depends on your perspective and values!

On one hand, she's caught more bugs and improved code quality. On the other hand, has she become dependent on AI assistance? Are her own code review skills atrophying? 😬 Opportune convenience can become a sneaking reliance and then a strong impediment that hampers one’s own professional growth & development. The code output velocity is more efficient thanks to AI, but are the developers (skilled humans) likely regressing? And is that a worthwhile tradeoff? 🤔

Such questions don't have simple answers, but they require deliberate consideration during design. What guardrails can prevent over-dependence? How do we maintain user agency and skill growth while providing AI assistance?

Applying ethical craft can mean activities like stakeholder mapping (who is affected by this AI system?), assessing the power dynamics (who benefits versus who bears risk or harm?), and even temporal considerations (short-term gains vs. long-term consequences) to sharpen the tradeoffs to be made, and to judge whether they're worthwhile! There's even a short workshop called "consequence scanning" that has been recognized as part of Salesforce's own ethical AI maturity model; worth checking out!

Ultimately, this isn't about adding ethical thinking as an afterthought — it's about embedding it into the design process from the beginning.

💰 The business case for craft

Here's what every design leader must understand: the difference between working AI and well-crafted AI is enormous in business terms.

Working AI gets you demos and pilot projects. Well-crafted AI gets you adoption, retention, and the kind of user loyalty that drives sustainable growth. 🙌🏽 The cost of getting AI design wrong isn't just poor user experience — it's wasted engineering investment, missed market opportunities, and damaged trust that's hard to rebuild.

Another way to think of it: the tactical craft delivers immediate ROI through reduced support burden and higher completion rates. The humanistic craft drives long-term retention by building appropriate trust. The relational craft creates stickiness and deeper engagement. The ethical craft protects brand reputation and ensures sustainable growth that supports people, cultures, communities and their values! What business leader wouldn’t want any of that? 😉

🤔 Questions for your team

As you think about your own AI initiatives, consider these questions:

Cross-functional ownership: Who owns each craft in your organization? UX typically handles the tactical and humanistic crafts, but the relational and ethical crafts require collaboration across disciplines. Where do you see gaps?

Measurement and iteration: How do you validate success across these crafts? Traditional UX metrics (completion rates, time on task) only capture part of the story. What new metrics matter for AI experiences?

Scalability: How do these principles apply when moving from prototype to production scale? The requirements often intensify as usage grows and user sophistication increases.

New roles and responsibilities: What new capabilities does your team need to build excellent AI experiences? This might involve new roles or new responsibilities for existing roles.

🛣️ The path forward

The craft of AI design is still emerging! This framework will likely evolve next year. We're all learning as we go, making mistakes, and discovering new patterns. But the teams that invest in developing these crafts early will have a stronger advantage as AI becomes ever more folded into digital experiences in various ways.

The goal isn't to become an AI expert overnight. It's to develop conscientious thoughtfulness about the novel problems AI introduces (creates?) and build systematic approaches to address them. Start with one craft, assess the impact, and expand from there…

The promise of AI design belongs to teams that can bridge the gap between AI's capabilities and human needs. That bridge is built through craft: deliberate, thoughtful, and constantly evolving approaches to design that honor both the power of AI and the richness of human experience. 🙏🏽✨

The question is: what story will your users tell about their AI experiences?

In AI, a “token” is “the smallest unit of text that an AI model can process. It serves as the fundamental building block for how the model understands and generates language. A token might be a single character, word, part of a word, or text chunk, varying based on the model's tokenization approach.” (definition per Google Gemini)